Google Analytics is by far the most popular tool in its segment, but it has a few limitations that can make this otherwise nearly perfect tool unsuitable for a large number of companies.

The main limitations of Google Analytics are related to sampling and data collection limits. The companies most affected are those that can’t afford the premium 360 version of Google Analytics (~$150k/year) but still have a good amount of traffic visiting their websites. In general, Google Analytics properties with >1M sessions/month or >10M hits/month are affected by heavy sampling and data collection limits.

In this article, we’re going to cover the different types of limitations present in the free version of Google Analytics and provide solutions/workarounds to all of them. Oh, and the solution, in most cases, does not include buying the 360 version.

Types of limitations in Google Analytics

Here’s a quick overview of the different types of limitations that come with the free version of Google Analytics.

Sampling in reports

Sampling on the reporting level means that, even if Google Analytics collected 100% of the hits, the reports you are seeing in the user interface are not based on 100% of those hits/sessions. In general, sampling starts if the period you are looking at contains more than 500k sessions in total. It can start a lot sooner, though, when more advanced custom segments are in use.

Data collection limits

From the official documentation:

If a property sends more hits per month to Analytics than allowed by the Analytics Terms of Service, there is no assurance that the excess hits will be processed. If the property’s hit volume exceeds this limit, a warning may be displayed in the user interface and you may be prevented from accessing reports.

Data collection limits that apply to all free Google Analytics accounts:

- up to 10M hits total per month

- 200,000 hits per user per day

- 500 hits per session
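To see whether a property is close to these limits, you could count hits yourself. The snippet below is a minimal sketch, assuming you already have some record of your hits (for example from a server log) as `(client_id, session_id, day)` tuples. The function name and the input format are made up for illustration; only the three limit values come from the list above.

```python
from collections import Counter

# Limits quoted in the list above (free Google Analytics).
MAX_HITS_PER_MONTH = 10_000_000
MAX_HITS_PER_USER_PER_DAY = 200_000
MAX_HITS_PER_SESSION = 500


def find_limit_violations(hits):
    """Check one month of hits against the three collection limits.

    hits: iterable of (client_id, session_id, day) tuples (hypothetical format).
    """
    per_user_day = Counter()
    per_session = Counter()
    total = 0
    for client_id, session_id, day in hits:
        total += 1
        per_user_day[(client_id, day)] += 1
        per_session[session_id] += 1
    return {
        "month_total_exceeded": total > MAX_HITS_PER_MONTH,
        "users_over_daily_limit": sorted(
            key for key, count in per_user_day.items()
            if count > MAX_HITS_PER_USER_PER_DAY
        ),
        "sessions_over_limit": sorted(
            session for session, count in per_session.items()
            if count > MAX_HITS_PER_SESSION
        ),
    }
```

Sessions or users that show up in the result are the ones where Google Analytics may start dropping hits.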

Data processing latency

Processing latency in Google Analytics is 24-48 hours. For standard accounts that send more than 200,000 sessions per day to Analytics, reports are refreshed only once a day. This can delay updates to reports and metrics for up to two days.

This makes Google Analytics quite useless for things like monitoring real-time performance, detecting usability issues, automated reports on an hourly basis, real-time product recommendations, and other use cases that require fresh data (e.g. machine learning, marketing automation).

Custom dimensions and metrics

There are 20 indices available for different custom dimensions and 20 indices for custom metrics in each free Google Analytics property. 360 accounts have 200 indices available for custom dimensions and 200 for custom metrics.

While 20 is plenty for a small business with a simple website, this number can become quite limiting if you need custom dimensions for things like A/B testing data (one for each live experiment), e-commerce variables, user-specific information, and more.

Aggregated metrics and no access to raw data

Unfortunately, in the free version of Google Analytics, there is no way to access the raw hit-level data. This means that if the way it calculates certain metrics or aggregates hits into sessions doesn’t suit your business requirements then you’re out of luck – without access to raw data, you can’t define your own calculations or aggregations.

Also, if you would like to analyze the user journey of a specific visitor, there is no easy way to query and analyze all hits from one visitor in chronological order.

Google Analytics 360 does have a BigQuery export but, by default, the metrics and sessions are still defined and pre-aggregated just like in the reports.

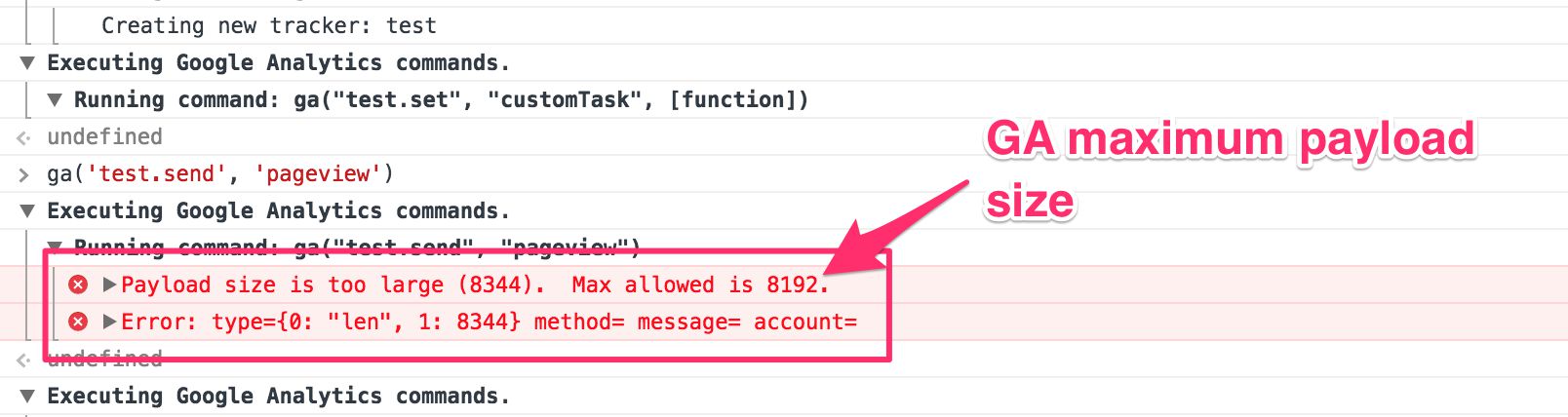

Hit payload limited to 8000 bytes

Depending on your analytics setup, some of your hits may include a lot of data: ecommerce product impressions, several custom dimensions etc. The Google Analytics hit payload size is limited to 8000 bytes. This means that all hits larger than this will be ignored by Google Analytics’ data processing engine and your dataset ends up being incomplete. What’s worse, Google Analytics doesn’t alert you when this is happening!

This affects both the free and 360 versions of Google Analytics.

Workarounds to sampling and other limitations

Now, let’s take a look at the same list of limitations and provide a solution/workaround to each of them.

Sampling in reports

Option 1 – Divide your queries into smaller chunks and join data using another tool (Excel, Pandas, R). This can be done manually in the UI, using the Reporting API, or by using a library for your favorite programming language (e.g. gago for Go) that has an “anti sampler” feature built-in.

Option 2 – Collect raw hit-level Google Analytics data in your data warehouse (e.g. BigQuery). This process is known as parallel tracking and functions by duplicating all hits that are sent to your Google Analytics property into your data warehouse. This allows you to write ad-hoc queries in standard SQL without any sampling.
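The chunking idea from Option 1 can be sketched in a few lines of Python. This is only an illustration: `fetch_report` is a hypothetical callable standing in for whatever you use to query the Reporting API, and the chunk size has to be chosen so that each chunk stays under the sampling threshold for your property.

```python
from datetime import date, timedelta


def split_date_range(start, end, chunk_days):
    """Split the inclusive range [start, end] into chunks of at most chunk_days days."""
    if chunk_days < 1:
        raise ValueError("chunk_days must be at least 1")
    chunks = []
    current = start
    while current <= end:
        chunk_end = min(current + timedelta(days=chunk_days - 1), end)
        chunks.append((current, chunk_end))
        current = chunk_end + timedelta(days=1)
    return chunks


def fetch_in_chunks(start, end, chunk_days, fetch_report):
    """Run one query per chunk and collect all returned rows.

    fetch_report is a hypothetical callable: (start, end) -> list of rows.
    """
    rows = []
    for chunk_start, chunk_end in split_date_range(start, end, chunk_days):
        rows.extend(fetch_report(chunk_start, chunk_end))
    return rows
```

Keep in mind that joining chunks like this works for additive metrics such as sessions or pageviews. Metrics like unique users can't simply be summed across chunks, because the same user may appear in several of them.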

Data collection limits

Option 1 – To get past the 10M hits/mo, 200,000 hits per user per day and 500 hits per session limits, the same Google Analytics parallel tracking solution can be used. This means that even if Google Analytics starts to skip some hits, they’re still available in your data warehouse and ready to be queried.

Option 2 – Set up multiple Google Analytics properties. This can be a little tricky but it is possible to divide your Google Analytics integration into multiple properties. Depending on how much traffic your site gets, you might divide all hits from the first week of the month into one property, hits from the second week into another property etc. Alternatively, you could divide hits based on the Client ID into as many groups/properties as needed. Then, you can use the Reporting API to pull data from all properties and join them using Excel, Pandas or some other tool.
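For the Client ID split, one possible approach is to hash the Client ID and use the result to pick a property. The sketch below shows the logic in Python. In a real setup this would run wherever your tracking code decides which property ID to use (usually JavaScript in the browser), and the property IDs here are placeholders.

```python
import hashlib


def pick_property(client_id, property_ids):
    """Map a Client ID to one of the given properties, always the same one."""
    digest = hashlib.sha256(client_id.encode("utf-8")).digest()
    # Use the first 8 bytes of the hash as a number and spread it over the properties.
    bucket = int.from_bytes(digest[:8], "big") % len(property_ids)
    return property_ids[bucket]


# Placeholder property IDs, not real ones.
PROPERTIES = ["UA-XXXXXXX-1", "UA-XXXXXXX-2", "UA-XXXXXXX-3"]
chosen = pick_property("1234567890.1580000000", PROPERTIES)
```

Since the choice depends only on the Client ID, all hits from one visitor end up in the same property, which keeps sessions and user journeys intact within each property.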

Data processing latency

If you need to monitor the site’s performance in real-time or need data for product recommendations or other machine-learning efforts, the 24-48 hour delay is probably way too long. Your only option to get access to near-real-time Google Analytics data is to use parallel tracking. With parallel tracking, processed hit data is available within ~5 seconds of being collected from your site. This allows you to build alert systems, monitor performance in real-time or feed your machine learning algorithms with fresh data at all times.

Custom dimensions and metrics

As mentioned earlier, the free version of Google Analytics limits you to 20 indices each for custom dimensions and custom metrics. If you hit the limit and need to add more, your first action should be to check whether there’s a dimension or metric that you no longer need. If that’s not the case, your options are either to upgrade to Google Analytics 360 (to get 200 metrics and dimensions) or to use parallel tracking and get access to unlimited custom metrics and dimensions. When using parallel tracking, dimensions and metrics with indices above 20 will not show up in Google Analytics reports but are available in your data warehouse.
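To get a feel for which fields would be visible where, here is a small sketch that splits the parameters of a hit into those Google Analytics reports can show and those that would only exist in the warehouse. It assumes the hit uses the `cd<index>` and `cm<index>` parameter names for custom dimensions and metrics; the function name is made up for this example.

```python
import re

FREE_INDEX_LIMIT = 20  # indices available in the free version, per this article
CUSTOM_KEY = re.compile(r"^c[dm](\d+)$")  # matches cd1, cm35, ...


def split_custom_fields(params):
    """Return (visible_in_ga, warehouse_only) dicts for one hit's parameters."""
    visible_in_ga, warehouse_only = {}, {}
    for key, value in params.items():
        match = CUSTOM_KEY.match(key)
        if match and int(match.group(1)) > FREE_INDEX_LIMIT:
            warehouse_only[key] = value
        else:
            visible_in_ga[key] = value
    return visible_in_ga, warehouse_only
```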

Aggregated metrics and no access to raw data

If you’re not happy with how Google Analytics aggregates data into sessions or calculates certain metrics, you need access to the raw hit-level data that allows you to define your own rules for almost any kind of aggregation or calculation. There are two options you can use to get access to raw hit-level Google Analytics data.

Option 1 – You guessed it! Parallel tracking sends the raw hit-level Google Analytics data into your data warehouse (with no sampling) and you can use standard SQL to query your data and tools like Excel, R or Pandas to make all sorts of calculations and aggregations. BigQuery has a native connection with most BI and data visualisation tools so reporting is easy and flexible.

Option 2 – If your Google Analytics property is not affected by the data collection limits (it gets <10M hits/mo), you could configure your custom dimensions to collect data like Client ID, Session ID, Hit Timestamp and Hit Type. With all these dimensions available in your dataset, you can use the Reporting API to pull the raw hit-level data into your local machine or data warehouse. If your property receives more than 10M hits a month, this option is only available if you split your data between multiple properties.
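Once you have hit-level rows from either option, defining your own session rules is fairly straightforward. The following is a minimal sketch, assuming each hit is a dict with `client_id`, `timestamp` and `hit_type` keys (names chosen for this example). It starts a new session whenever a visitor has been inactive for longer than a timeout that you pick yourself.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def sessionize(hits, timeout_minutes=30):
    """Group hits into sessions per visitor, using a custom inactivity timeout.

    Returns {client_id: [session, ...]}, where each session is a list of hits
    in chronological order.
    """
    by_client = defaultdict(list)
    for hit in hits:
        by_client[hit["client_id"]].append(hit)

    timeout = timedelta(minutes=timeout_minutes)
    sessions = {}
    for client_id, client_hits in by_client.items():
        # Order the visitor's hits by time before cutting them into sessions.
        client_hits.sort(key=lambda h: h["timestamp"])
        client_sessions = [[client_hits[0]]]
        for hit in client_hits[1:]:
            previous = client_sessions[-1][-1]
            if hit["timestamp"] - previous["timestamp"] > timeout:
                client_sessions.append([hit])  # gap too long: new session
            else:
                client_sessions[-1].append(hit)
        sessions[client_id] = client_sessions
    return sessions
```

The same sorted list per `client_id` also gives you the full journey of a single visitor, which is hard to get out of the standard reports.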

Hit payload limited to 8000 bytes

If your enhanced ecommerce transactions include many products, you have lots of custom dimensions, or for some other reason some of your hits exceed the 8000-byte limit, then you are losing data. Google Analytics will, without any warning, simply skip those hits. You have three options for solving this issue.

Option 1 – Send less data by removing some data points. Simo Ahava has written a great blog post on automatically reducing the Google Analytics payload length by removing some of the unnecessary data points and creating an order for removing other data points until the required payload size is reached.

Option 2 – Use the data import feature in Google Analytics to import extra information after the data has been sent to Google Analytics. This way you can send only the most important information (Client ID, transaction ID etc.) with the main hit and import other details (product information, custom dimensions etc.) later using the import feature. Check out this post by Dan Wilkerson for a detailed guide for doing this with Google Tag Manager.

Option 3 – With parallel tracking, your hits are limited to 16,000 bytes (double the Google Analytics limit) and this can be raised even more on request.
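The idea behind Option 1 (drop data points in a fixed order until the hit fits) can be illustrated with a short Python sketch. This is not the implementation from the blog post mentioned above, just the general logic. It assumes the hit is a dict of parameters and that you supply your own removal order; the 8000-byte value is the limit as stated in this article.

```python
from urllib.parse import urlencode

MAX_PAYLOAD_BYTES = 8000  # limit as stated in this article


def payload_size(params):
    """Size in bytes of the URL-encoded payload."""
    return len(urlencode(params).encode("utf-8"))


def trim_payload(params, removal_order, max_bytes=MAX_PAYLOAD_BYTES):
    """Remove keys in the given order until the payload fits.

    removal_order lists the least important keys first. If the payload is
    still too large after removing all of them, it is returned as is.
    """
    trimmed = dict(params)
    for key in removal_order:
        if payload_size(trimmed) <= max_bytes:
            break
        trimmed.pop(key, None)
    return trimmed
```

In practice this check would run in the browser before the hit is sent, so treat the Python version as a way to test your removal order against sample hits.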

Key takeaways

Now that you have a pretty good overview of the limitations that come with the free version of Google Analytics, and some that come with the 360 (premium) version, it’s a good time to assess your own Google Analytics implementation(s). Do any of the limitations affect your data and its accuracy? Might some of the limitations become an issue in the future?

Knowing the limitations of a tool is extremely important. You may be investing a lot of time and money into a setup that may not be sufficient anymore as your business grows.

With all its limitations, Google Analytics is still an extremely powerful tool that has an awesome community. If sampling or some of the other limitations are an issue for you, I believe you can fix them with some of the solutions suggested in this blog post.

Most of the sampling and data collection limitations of Google Analytics are solvable with parallel tracking, a system that sends raw hit-level Google Analytics data into BigQuery (or any other data warehouse) in real-time.

***

Should you have any other issues with Google Analytics or have an alternative solution to any of the problems mentioned in this post, please share your thoughts in the comments below.

One of our properties is receiving 100M + hits a month (I know…) and sampling has been killing the productivity of our analysis.

This parallel tracking solution seems to have at least some potential in our case. Wonder about the pricing, though? Anyway, going to give it a try and hopefully it can manage our numbers.